Docker Compose In Practice

Continuing on the road to Kubernetes: the previous article was Docker Swarm In Action, and after understanding Swarm it is worth getting familiar with Docker Compose. Docker Swarm forms a cluster of Docker hosts; once the Manager node is told to deploy a service, it automatically finds suitable nodes to run the containers. Compose is another concept entirely. It organizes multiple associated containers into a whole for deployment, for example a load-balancer container, several web containers, and a Redis cache container that together make up one bundle.


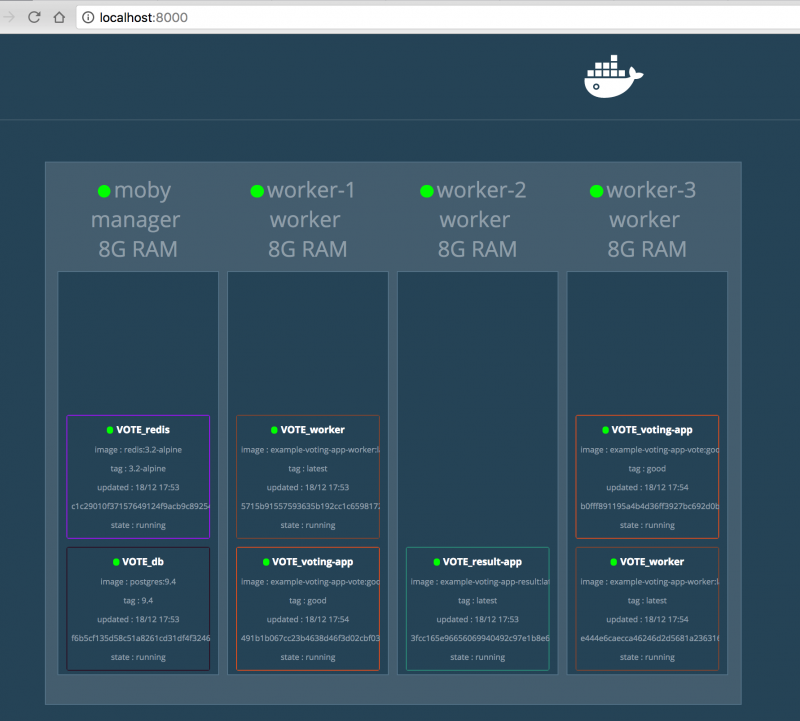

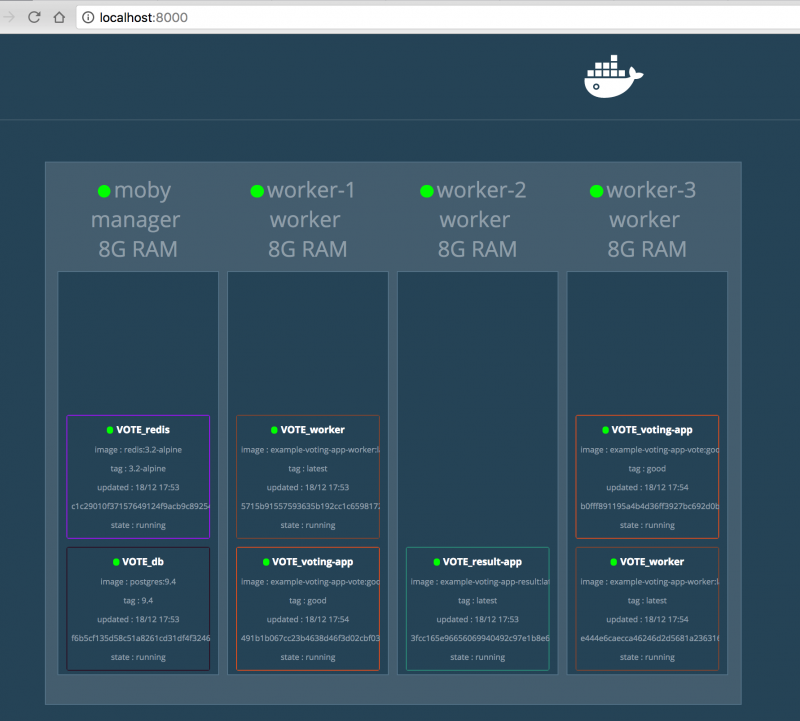


As its name suggests, Compose introduces the idea of container orchestration. Kubernetes, which we will study later, is a tool for organizing, operating, and managing containers at a higher level. Because Compose organizes containers as a unit, it can start several associated containers with a single command instead of starting each container separately.

As for installing Docker Compose, Docker Desktop for Mac OS X already ships with `docker-compose`. Since the `docker-compose` tool used in this article is written in Python, we can also install it with `pip install docker-compose` and then use the `docker-compose` command.

The differences between a Docker container, Swarm, and Compose are shown in this picture.

Compose consists of multiple containers, which can be deployed as a whole to a single Docker host or to a Swarm host cluster.

Let's try out Docker Compose by designing a service made of the following containers:

- A front-end load balancer, played by HAProxy, which forwards requests to the two web containers below

- Two web containers, demonstrated with Python Flask

- A Redis cache container, whose cached data the web containers access

The files involved in defining a Docker Compose project are the Dockerfile and docker-compose.yml. We need to create a Dockerfile for each custom Docker image. If a container uses an existing Docker image directly, no Dockerfile is required. In other words, a Compose project can contain one or more Dockerfiles. Here is an example directory structure for a Compose project:

myapp/

- Dockerfile
- docker-compose.yml

If we need multiple Dockerfiles, each Dockerfile must be placed in a different directory. In this case, the directory structure needs to be adjusted as follows (including several auxiliary files that will be used later):

myapp/

- proxy/
  - Dockerfile
  - haproxy.cfg
- web/
  - Dockerfile
  - app.py
  - requirements.txt
- docker-compose.yml

Next, look at the content of each file.

myapp/proxy/Dockerfile

    FROM haproxy:2.1.3
    COPY haproxy.cfg /usr/local/etc/haproxy/haproxy.cfg

myapp/proxy/haproxy.cfg

    frontend myweb
        bind *:80
        default_backend realserver

    backend realserver
        balance roundrobin
        server web1 web_a:5000 check
        server web2 web_b:5000 check

This is about the simplest possible haproxy.cfg. It just forwards requests to web_a and web_b in turn (round robin). We will see web_a and web_b in the docker-compose.yml file later.

myapp/web/Dockerfile

    FROM python:3.7-alpine
    COPY app.py requirements.txt ./
    RUN pip install -r requirements.txt
    CMD ["python", "app.py"]

myapp/web/requirements.txt

    flask
    redis

myapp/web/app.py

    import socket

    import redis
    from flask import Flask

    # 'redis' is the service name from docker-compose.yml; it resolves to the Redis container
    cache = redis.Redis('redis')
    app = Flask(__name__)


    def hit_count():
        # INCR is atomic, so all web containers can share one counter
        return cache.incr('hits')


    @app.route('/')
    def index():
        count = hit_count()
        return 'Served by <b>{}</b>, count: <b>{}</b>\n'.format(socket.gethostname(), count)


    if __name__ == '__main__':
        # Listen on all interfaces so the proxy container can reach the app (Flask's default port is 5000)
        app.run(host='0.0.0.0')

A simple Flask app stands in for the web application. It keeps the access count in Redis and responds with the hostname of the machine (here, the container) that handled the current request.
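To see the counting behaviour without starting Redis, here is a minimal sketch that swaps the Redis client for an in-memory stand-in. `InMemoryCache` and `render` are hypothetical names invented for this illustration; only the response format and the `hits` key come from app.py above.

```python
class InMemoryCache:
    """Hypothetical stand-in for redis.Redis that only supports incr()."""

    def __init__(self):
        self._values = {}

    def incr(self, key):
        # Like Redis INCR: a missing key counts as 0 before it is incremented.
        self._values[key] = self._values.get(key, 0) + 1
        return self._values[key]


def render(cache, hostname):
    """Build the same response body as index() in app.py."""
    count = cache.incr('hits')
    return 'Served by <b>{}</b>, count: <b>{}</b>\n'.format(hostname, count)


# One cache object shared by both "containers", as Redis is in the real setup.
shared = InMemoryCache()
print(render(shared, 'web_a'), end='')
print(render(shared, 'web_b'), end='')
```

Because both calls share one cache object, the count keeps increasing no matter which hostname serves the request, which is the behaviour we expect from the two web containers sharing one Redis container.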

myapp/docker-compose.yml

    version: '3.7'
    services:
      web_a:
        build: ./web
        ports:
          - 5000
      web_b:
        build: ./web
        ports:
          - 5000
      proxy:
        build: ./proxy
        ports:
          - "80:80"
      redis:
        image: "redis:alpine"

Images are built from two Dockerfiles (./web and ./proxy), while Redis uses the redis:alpine image directly. The ports and port mappings are defined here, so no EXPOSE instruction is needed in the Dockerfiles. We start two web services, web_a and web_b, and the HAProxy in front forwards requests to these two containers according to haproxy.cfg.
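As a rough way to read the file above, the following sketch mirrors it as a Python dictionary and reports, for each service, whether its image is built or pulled and how its ports are published. The `summarize` helper is hypothetical and only understands the two port forms used in this article (`5000` and `"80:80"`); the real Compose file format supports more.

```python
# Python mirror of the docker-compose.yml above (same services and keys).
COMPOSE = {
    'version': '3.7',
    'services': {
        'web_a': {'build': './web', 'ports': [5000]},
        'web_b': {'build': './web', 'ports': [5000]},
        'proxy': {'build': './proxy', 'ports': ['80:80']},
        'redis': {'image': 'redis:alpine'},
    },
}


def summarize(compose):
    """Return {service: (image source, [port descriptions])}."""
    summary = {}
    for name, spec in compose['services'].items():
        if 'build' in spec:
            source = 'build ' + spec['build']
        else:
            source = 'pull ' + spec['image']
        ports = []
        for entry in spec.get('ports', []):
            host, sep, container = str(entry).partition(':')
            if sep:
                ports.append('host {} -> container {}'.format(host, container))
            else:
                # Only the container port is given: Docker picks a free host port.
                ports.append('random host port -> container {}'.format(host))
        summary[name] = (source, ports)
    return summary


for service, (source, ports) in summarize(COMPOSE).items():
    print(service, source, ports)
```

The "random host port" case is why the web containers show up with host ports such as 32777 in `docker ps`, while the proxy is always reachable on port 80.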

Now, from the command line, go to the myapp directory and run:

    $ docker-compose up -d

On the first run, this command also builds the Docker images myapp_proxy, myapp_web_a, and myapp_web_b and then starts the containers. If those images already exist locally, the containers are started directly. After startup completes, run `docker ps` to check:

    $ docker ps
    CONTAINER ID   IMAGE          COMMAND                  CREATED              STATUS              PORTS                     NAMES
    e5d918e0ae2a   myapp_proxy    "/docker-entrypoint.…"   About a minute ago   Up About a minute   0.0.0.0:80->80/tcp        myapp_proxy_1
    56819534cfa6   myapp_web_a    "python app.py"          About a minute ago   Up About a minute   0.0.0.0:32777->5000/tcp   myapp_web_a_1
    a2125855e55a   myapp_web_b    "python app.py"          About a minute ago   Up About a minute   0.0.0.0:32778->5000/tcp   myapp_web_b_1
    f216a7b5f95b   redis:alpine   "docker-entrypoint.s…"   About a minute ago   Up About a minute   6379/tcp                  myapp_redis_1

All four containers have started: one HAProxy, two web, and one Redis, which is exactly what we need. Let's verify the behavior of the whole service:

    $ curl http://localhost
    Served by <b>a2125855e55a</b>, count: <b>1</b>
    $ curl http://localhost
    Served by <b>56819534cfa6</b>, count: <b>2</b>
    $ curl http://localhost
    Served by <b>a2125855e55a</b>, count: <b>3</b>

Load balancing, the web services, and Redis work together as intended.
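The manual curl check can also be scripted. The sketch below is one possible way to do it using only the Python standard library; `parse_response`, `fetch`, and `check` are hypothetical helper names, and the default URL assumes the proxy is published on port 80 of localhost as in this article.

```python
import re
import urllib.request

# Matches the response format produced by index() in app.py.
RESPONSE_RE = re.compile(
    r'Served by <b>(?P<host>[^<]+)</b>, count: <b>(?P<count>\d+)</b>')


def parse_response(body):
    """Extract (hostname, count) from one response of the Flask app."""
    match = RESPONSE_RE.search(body)
    if match is None:
        raise ValueError('unexpected response: {!r}'.format(body))
    return match.group('host'), int(match.group('count'))


def fetch(url='http://localhost', timeout=5):
    """Request the proxy once and return the decoded body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode('utf-8')


def check(bodies):
    """Return (set of hostnames seen, True if counts rise by exactly 1)."""
    parsed = [parse_response(body) for body in bodies]
    hosts = {host for host, _ in parsed}
    counts = [count for _, count in parsed]
    consecutive = all(b - a == 1 for a, b in zip(counts, counts[1:]))
    return hosts, consecutive
```

With the service running, `check([fetch() for _ in range(4)])` should report two distinct hostnames and consecutive counts, provided no other client hits the service at the same time.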

If we run `docker kill 56` to kill myapp_web_a, it will not recover automatically. Running `docker-compose up -d` again brings only myapp_web_a back, without affecting the other containers:

    $ docker-compose up -d
    Starting myapp_web_a_1 ...
    myapp_redis_1 is up-to-date
    Starting myapp_web_a_1 ... done

Run `docker-compose -h` to learn the detailed usage of this command. Many subcommands are similar to their `docker` counterparts; the following apply to the Compose project as a whole:

- `docker-compose images`: display the Docker images used by the current Compose project

- `docker-compose logs`: display the logs of the current Compose project

- `docker-compose down`: stop and remove all containers of the Compose project

- `docker-compose kill`: kill all containers of the Compose project, without having to `docker kill` them one by one

- `docker-compose restart`: restart the entire Compose service (all containers)
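The recovery behaviour seen earlier, where re-running `docker-compose up -d` only restarted the killed container, can be pictured as comparing the services defined in docker-compose.yml with the ones currently running. The following is a deliberately simplified model, not Compose's actual implementation (which also recreates containers when their configuration or image has changed); `plan_up` is a hypothetical name.

```python
def plan_up(defined, running):
    """Return (to_start, untouched) for a simplified `up` run.

    defined: service names listed in docker-compose.yml, in order
    running: set of service names whose container is currently running
    """
    to_start = [name for name in defined if name not in running]
    untouched = [name for name in defined if name in running]
    return to_start, untouched


defined = ['web_a', 'web_b', 'proxy', 'redis']
running = {'web_b', 'proxy', 'redis'}  # web_a was killed
print(plan_up(defined, running))
```

In this model only web_a ends up in the list of services to start, which matches the `Starting myapp_web_a_1 ... done` output shown earlier.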

There is a simpler way to support HAProxy with a dynamic number of web containers.

Modify the previous docker-compose.yml so that its contents are as follows:

    version: '3.7'
    services:
      web:
        build: ./web
        ports:
          - 5000
      proxy:
        image: dockercloud/haproxy
        links:
          - web
        ports:
          - "80:80"
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
      redis:
        image: "redis:alpine"

The proxy now uses the dockercloud/haproxy image, so we can remove the myapp/proxy folder along with its Dockerfile and haproxy.cfg.

Now start with the same command, `docker-compose up -d`:

    $ docker ps
    CONTAINER ID   IMAGE                 COMMAND                  CREATED          STATUS         PORTS                                   NAMES
    3ce8fcd986ac   dockercloud/haproxy   "/sbin/tini -- docke…"   6 minutes ago    Up 4 minutes   443/tcp, 0.0.0.0:80->80/tcp, 1936/tcp   myapp_proxy_1
    c73be3d3f795   myapp_web             "python app.py"          6 minutes ago    Up 4 minutes   0.0.0.0:32793->5000/tcp                 myapp_web_1
    0fabf6c6e81f   redis:alpine          "docker-entrypoint.s…"   16 minutes ago   Up 4 minutes   6379/tcp                                myapp_redis_1

There is only one web container, but it can now be scaled dynamically with `docker-compose up --scale web=3 -d`:

    $ docker-compose up --scale web=3 -d
    Starting myapp_web_1 ...
    Starting myapp_web_1 ... done
    Creating myapp_web_2 ... done
    Creating myapp_web_3 ... done
    myapp_proxy_1 is up-to-date
    $ docker ps
    CONTAINER ID   IMAGE                 COMMAND                  CREATED          STATUS         PORTS                                   NAMES
    e3b4db8db92d   myapp_web             "python app.py"          7 seconds ago    Up 5 seconds   0.0.0.0:32796->5000/tcp                 myapp_web_2
    f1ba850865e0   myapp_web             "python app.py"          7 seconds ago    Up 5 seconds   0.0.0.0:32795->5000/tcp                 myapp_web_3
    3ce8fcd986ac   dockercloud/haproxy   "/sbin/tini -- docke…"   8 minutes ago    Up 6 minutes   443/tcp, 0.0.0.0:80->80/tcp, 1936/tcp   myapp_proxy_1
    c73be3d3f795   myapp_web             "python app.py"          8 minutes ago    Up 6 minutes   0.0.0.0:32793->5000/tcp                 myapp_web_1
    0fabf6c6e81f   redis:alpine          "docker-entrypoint.s…"   17 minutes ago   Up 6 minutes   6379/tcp                                myapp_redis_1

    $ curl http://localhost
    Served by <b>c73be3d3f795</b>, count: <b>20</b>
    $ curl http://localhost
    Served by <b>e3b4db8db92d</b>, count: <b>21</b>
    $ curl http://localhost
    Served by <b>f1ba850865e0</b>, count: <b>22</b>

The three requests were processed by three different web containers.
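The curl output above is consistent with round-robin balancing, the same strategy configured explicitly in the earlier haproxy.cfg. As a small illustration (assuming plain round robin with equal weights, and using the container IDs from the output above purely as labels), the selection order can be modelled like this:

```python
from itertools import cycle, islice


def round_robin(backends, request_count):
    """Return the backend chosen for each of request_count requests."""
    return list(islice(cycle(backends), request_count))


backends = ['c73be3d3f795', 'e3b4db8db92d', 'f1ba850865e0']
for number, backend in enumerate(round_robin(backends, 4), start=1):
    print('request {} -> {}'.format(number, backend))
```

The fourth request wraps around to the first backend again, so each container receives an equal share of the traffic over time.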

Note:

- `docker-compose scale web=2`: this command is not recommended (it is deprecated); run `docker-compose up --scale web=2 -d` instead.

- docker-compose.yml: the `deploy` configuration only takes effect in Swarm mode. `replicas` under `deploy` works with the `docker stack deploy` command and is ignored by `docker-compose up` and `docker-compose run`.

About running Compose on the Swarm cluster

It seems a bit troublesome; see the official guide Use Compose with Swarm. We need to use `docker stack` to deploy a Compose file. We won't actually do this for now, and I am not sure how common this combination is in real production environments. The key is to understand how the containers are distributed among the Swarm nodes after deployment: is the Compose application still kept as a whole, or are its containers extracted and deployed individually across the Swarm cluster?

From a picture in Deploy Docker Compose (v3) to Swarm (mode) Cluster

As the picture above shows, Swarm does extract the containers from a Compose application and deploys each of them to the Swarm cluster, rather than treating the Compose application as a whole.

Links:

- How to use Docker Compose to run complex multi container apps on your Raspberry Pi

- Docker Compose multi-container deployment (5)

- Introduction to Docker-Compose Installation and Use

[Copyright notice] This article is licensed under Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0).